Archive for the 'Technology' Category

Deploying Firefox and Thunderbird Policies to Prevent auto-updates and Tune Other Features

Long-time Firefox and Thunderbird user here. I’ve tried dozens and dozens of other browsers, including the much-lauded Google Chrome, but I always come back to Firefox. In my experience it’s faster, lighter on memory, far more feature-rich, more flexible and more secure than the alternatives. Chrome, by comparison, is slow, an extreme memory hog, has a questionable security model, and lacks the powerful features that I’ve come to use over the years.

I tend to run the latest “Developer” or “Nightly” editions of these tools, and by doing so, I agree to certain constraints (daily, enforced upgrades being one example), but with that sometimes comes product changes that cause new, undiscovered issues, breakage and undefined behavior.

My Thunderbird mail folders for example, go back 20 years and contain well over 200,000 archived and active emails. I’ve purged all of the garbage, junk, unnecessary emails as they come in, being a big proponent of Merlin Mann’s “Inbox Zero” methodology for almost 15 years, but it’s important that mail be available and accessible on-demand. Something that breaks my ability to read an IMAP folder or search across those folders and tags, would not be good.

Enter Policies!

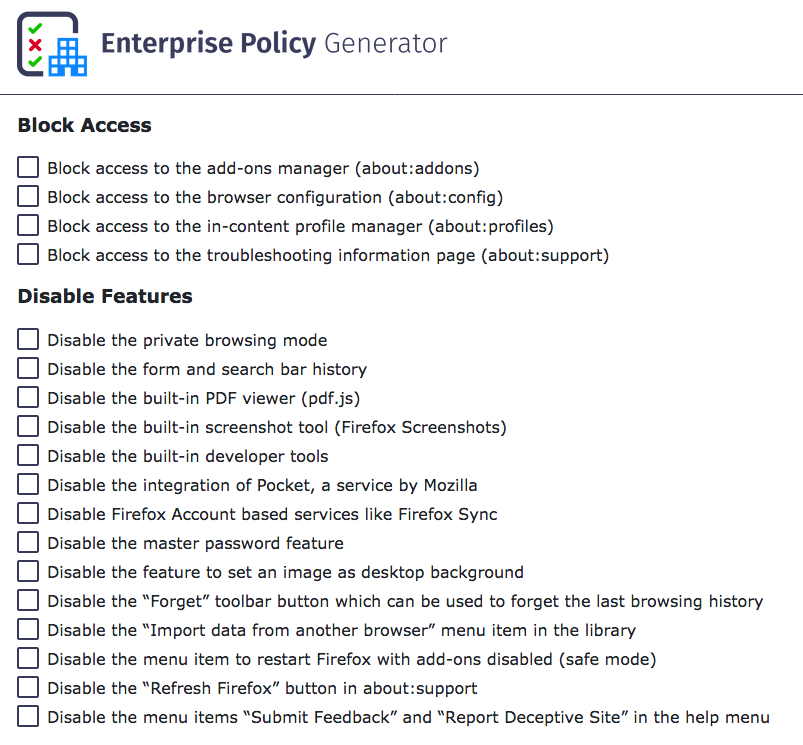

With policies deployed, you can govern what behavior is turned on, off and supported by your Firefox browser or Thunderbird mail client. For Firefox, there’s an easy add-on called “Enterprise Policy Generator” written by Sören Hentzschel that I use to start off the policies I’m interested in. Here’s just a small sample of what’s available in the tool:

Two of the first items I turn off are “Pocket” and the constant daily upgrade notices. I do upgrade frequently, but I make sure I back up my profile, add-ons and browser data before testing an upgrade, so I have a means to downgrade if the new version breaks my add-ons or use of the browser. To do that, you can create a policy that disables these with the EPG, or you can just create a policies.json and add the following to it:

{

"policies": {

"DisableAppUpdate": true

}

}

This will stop the browser from requesting updates on a daily basis. There is a preference in Firefox under about:config called app.update.auto which can be set to “False”, but it doesn’t work. Likewise, blanking out app.update.url in the same configuration pane does not work either. The only way to do this is to deploy a policy that forbids it.

The policies.json file has to go into a specific directory in the application directory, not the user’s profile (where it could be altered or modified by each user). Here’s where those need to go:

On macOS

/Applications/Firefox Developer Edition.app/Contents/Resources/distribution

On Linux

If you’re using packages:

/usr/lib/firefox/distribution

If you’re using the tarball or nightly releases:

/opt/firefox/distribution

On Microsoft Windows

C:\Program Files\Firefox Developer Edition\distribution

The important part is that it lives in a new directory called distribution inside the same directory that holds the main Firefox data files. You’ll need to create this directory if it doesn’t already exist. For Thunderbird, the process is similar, just a slightly different directory:

On macOS:

/Applications/Thunderbird.app/Contents/Resources/distribution

or

/Applications/Thunderbird Daily.app/Contents/Resources/distribution

Follow the same model and paths you did with Firefox for Linux and Microsoft Windows.
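The placement rules above (a distribution directory next to the application's main files, created if missing, holding policies.json) can be sketched as a small Node.js script. This is only a minimal sketch: `writePolicies` is a hypothetical helper name, not something Firefox or Thunderbird ships, and the application directory you pass in is whichever path from the lists above matches your install.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper (not part of Firefox or Thunderbird): writes
// <appDir>/distribution/policies.json, creating the directory if needed.
function writePolicies(appDir, policies) {
  const distDir = path.join(appDir, 'distribution');
  fs.mkdirSync(distDir, { recursive: true }); // no error if it already exists
  const target = path.join(distDir, 'policies.json');
  // Wrap the settings in the top-level "policies" key the file format expects.
  const body = JSON.stringify({ policies }, null, 2) + '\n';
  fs.writeFileSync(target, body);
  return target;
}

// Example (needs write access to the application directory):
// writePolicies('/opt/firefox', { DisableAppUpdate: true });
```

Because the file lives in the application directory rather than the profile, running this usually requires administrator or root privileges.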

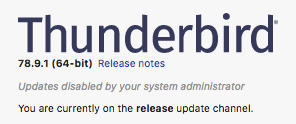

To confirm that policies.json is in the correct directory, close and relaunch your Firefox or Thunderbird client, go to Help -> About, and look for the following near the top of the About dialog:

Here is a copy of an expanded policies.json that I use on my production systems:

{

"policies": {

"DisableAppUpdate": true,

"DisableFeedbackCommands": true,

"DisableFirefoxStudies": true,

"DisablePocket": true,

"DisableSystemAddonUpdate": true,

"DisableTelemetry": true,

"ExtensionUpdate": false,

"NetworkPrediction": true,

"Preferences": {

"browser.fixup.dns_first_for_single_words": true,

"browser.tabs.warnOnClose": true

},

"PromptForDownloadLocation": true

}

}

You can use this for both Firefox and Thunderbird.

If you want a full breakdown of every possible policy item, you can visit the Mozilla Policy Templates GitHub page for detailed explanations.

While we’re on the subject of Git, you might also want to investigate using Git to manage these policies and configurations, so you can easily deploy them across multiple machines that you use your browser or mail client in.

Hope that helps. Good luck!

Converting SuperMicro BMC Sensor Temperatures from Celsius to Fahrenheit

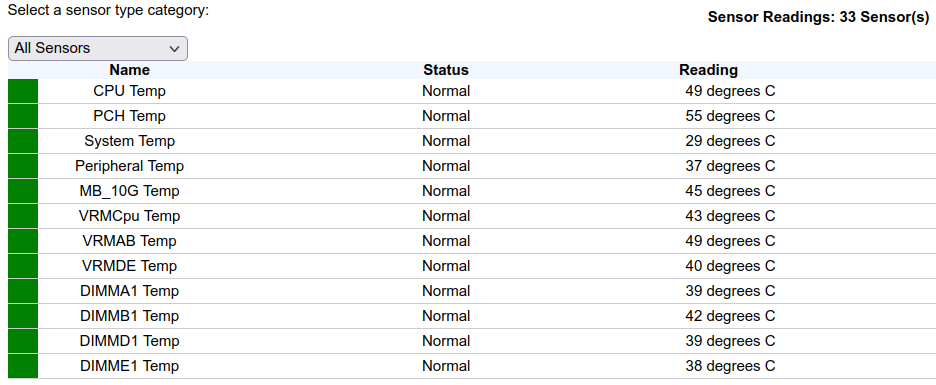

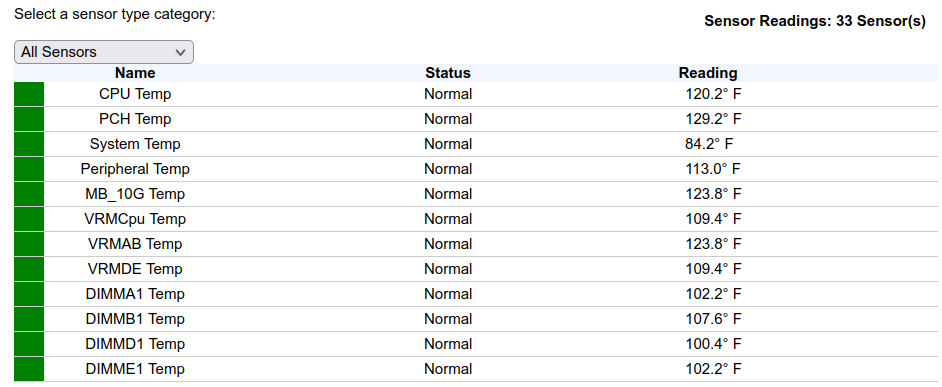

If you’ve ever used a SuperMicro BMC before, you’ve no doubt seen the temperatures section under Server Health => Sensor Readings. These are always expressed in Celsius, but sometimes you want to quickly convert those to Fahrenheit so you can compare them with other data/sensors.

Enter Tampermonkey! I’ve been using Tampermonkey under Firefox for the last few years to re-skin/re-theme Salesforce, Greenhouse and half a dozen other sites I use, some of them in very extreme ways, adding features and functions that the parent site itself doesn’t have or support.

In this case, this is a very simple snippet that will parse the sensor table and convert the Celsius values to Fahrenheit for you, just by loading the page. The code is:

// ==UserScript==

// @name SuperMicro Sensor Conversion

// @namespace https://192.168.4.50/

// @description Convert the SMC Sensor outputs to Fahrenheit vs. Celsius

// @include /^https?://192.168.4.50/.*$/

// @author setuid@gmail.com

// @version 1.00

// ==========================================================================

//

// ==/UserScript==

'use strict';

setTimeout(() => {

document.querySelectorAll('div[id="HtmlSensorTable"] > table > tbody > tr > td').forEach(node => {

// match() returns an array (or null); index 1 holds the captured digits

var temp = node.innerText.match(/(\d+) degrees C/);

if (temp) {

var fah = (parseInt(temp[1], 10) * 9 / 5 + 32).toFixed(1);

node.innerText = node.innerText.replace(/(.*?)(\d+) degrees C/, `$1 ${fah}° F`);

}

});

}, 500);

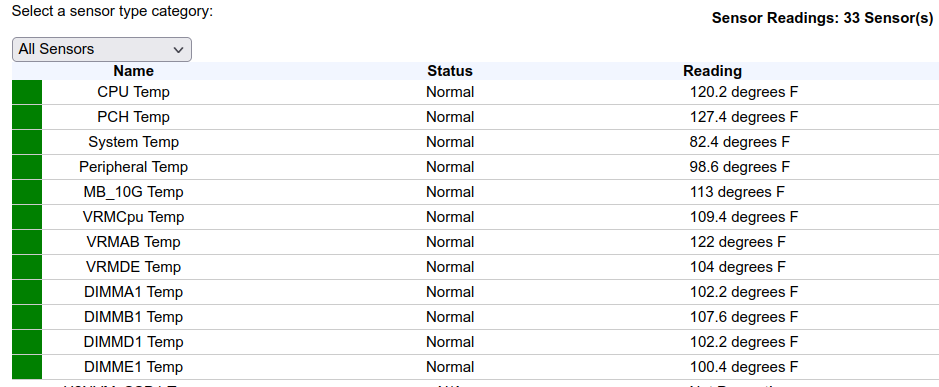

I tuned that a little more, by adding the degree symbol, instead of the words ‘degrees’, which now looks like:

It could be refined even further, targeting the inner iframes that this table resides in, or converting to React, but this was a quick 30-minute hack to solve a specific need I had.
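The conversion at the heart of the userscript can also be pulled out into a plain function, which makes it easy to check outside the browser. This is a minimal sketch rather than the script as deployed: `celsiusTextToFahrenheit` is a hypothetical name, and it assumes the cell text uses the same "NN degrees C" wording the userscript looks for.

```javascript
// Hypothetical helper: rewrites every "NN degrees C" in a string as "NN.N° F".
// Assumes whole-number Celsius readings, as the userscript's regex does.
function celsiusTextToFahrenheit(text) {
  return text.replace(/(\d+) degrees C/g, (_match, celsius) => {
    const fahrenheit = (parseInt(celsius, 10) * 9 / 5 + 32).toFixed(1);
    return `${fahrenheit}° F`;
  });
}

// Text without a Celsius reading is returned unchanged.
console.log(celsiusTextToFahrenheit('54 degrees C'));
console.log(celsiusTextToFahrenheit('N/A'));
```

Using a replacer callback means a cell with no reading never produces an "undefined° F" value, since the callback only runs when the pattern matches.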

Note, you can also get these same temperature values programmatically, via the Redfish API, if your chassis is properly licensed to permit it.
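As a rough illustration of the Redfish route, the standard Thermal resource lists sensors in a `Temperatures` array, each with a `Name` and a `ReadingCelsius` value. The sketch below converts such a document once it has been fetched and parsed. The function name `thermalToFahrenheit` and the sample readings are made up for illustration, and the resource path mentioned in the comment (`/redfish/v1/Chassis/1/Thermal`) is an assumption, since the chassis ID varies by system.

```javascript
// Hypothetical helper: takes a parsed Redfish Thermal resource (for example
// the JSON from /redfish/v1/Chassis/1/Thermal; the chassis ID is an assumption)
// and returns sensor names with readings converted to Fahrenheit.
function thermalToFahrenheit(thermal) {
  return (thermal.Temperatures || [])
    // Absent or unpopulated sensors report null instead of a number.
    .filter(sensor => typeof sensor.ReadingCelsius === 'number')
    .map(sensor => ({
      name: sensor.Name,
      fahrenheit: Number((sensor.ReadingCelsius * 9 / 5 + 32).toFixed(1)),
    }));
}

// Made-up sample data in the shape of a Thermal resource.
const sample = {
  Temperatures: [
    { Name: 'CPU Temp', ReadingCelsius: 54 },
    { Name: 'Peripheral Temp', ReadingCelsius: null },
  ],
};
console.log(thermalToFahrenheit(sample));
```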

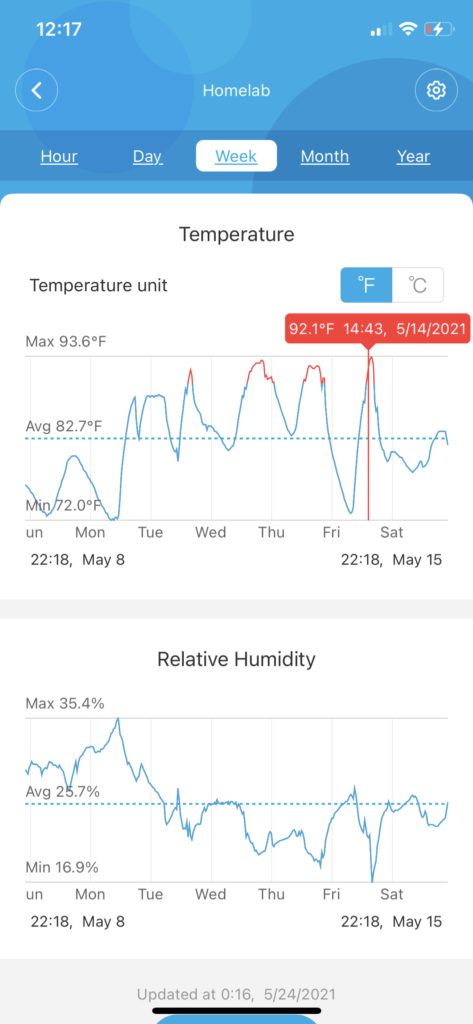

My homelab gets VERY warm during the day when the gear is running at full tilt, so I picked up a Govee Temp/Humidity sensor [Amazon link, not a referral or affiliate link][Govee main website product link], and it’s been very enlightening, showing me more about the trends in my office than I had visibility into before.

Here’s the last week’s temps and humidity in my office/homelab:

The only downside is that I can’t figure out a way to automate pulling/exporting this data, so I can import it into my Prometheus server and graph it with Grafana. Of note: I just taught myself Prometheus + Grafana tonight while adding all of my servers + UPS into it for monitoring. The UPS took a bit more effort, as it’s only using SNMP. I’ll go into more detail on that in future blog posts.

HOWTO: Run Proxmox 6.3 under VMware ESXi with networked guest instances

One of my machines in my production homelab is an ESXi server, a long-toothed upgrade from the 5.x days.

I keep a lot of legacy VMs and copies of every version of Ubuntu, Fedora, Slackware, Debian, CentOS and hundreds of other VMs on it. It’s invaluable to be able to spin up a test machine on any OS, any capacity, within seconds.

Recently, the need to ramp up fast on Proxmox has come to the front of my priority list for work and specific customer needs. I don’t have spare, baremetal hardware to install Proxmox natively, so I have to spin it up under my existing VMware environment as a guest.

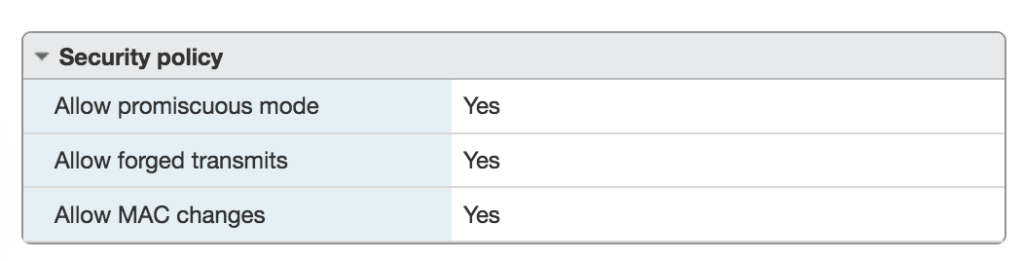

The problem here is that running one hypervisor as a guest under another hypervisor requires some specific preparation, so that the nested guest’s packets are correctly and cleanly routed through the parent host’s physical network interfaces.

Read on for how to configure this in your own environment!

VMware ESXi

In VMware ESXi, there are a few settings that you need to adjust, to enable “Promiscuous Mode”, “Forged Transmits” and “MAC Changes”. These are found under the “VM Network” section of your ESXi web-ui:

Once you’ve made these changes, you need to restart your VMware host in order to enable them for newly-created VMs under that host.

VMware Workstation

If you’re running VMware Workstation instead of ESXi, you need to make sure your ‘vmnet’ devices in /dev/ have the correct permissions to permit enabling promiscuous mode. You can do that with a quick chmod 0777 /dev/vmnet* or you can adjust the VMware init script that creates these nodes. Normally these would be adjusted in ‘udev’ rules, but those rules are run before the VMware startup, so changes are overwritten by VMware’s own automation.

In /etc/init.d/vmware, make the following adjustment:

vmwareStartVmnet() {

vmwareLoadModule $vnet

"$BINDIR"/vmware-networks --start >> $VNETLIB_LOG 2>&1

chmod 666 /dev/vmnet* # Add this line

}

Now that you have your host hypervisor configured to support nested guest hypervisors, let’s proceed with the Proxmox installation.

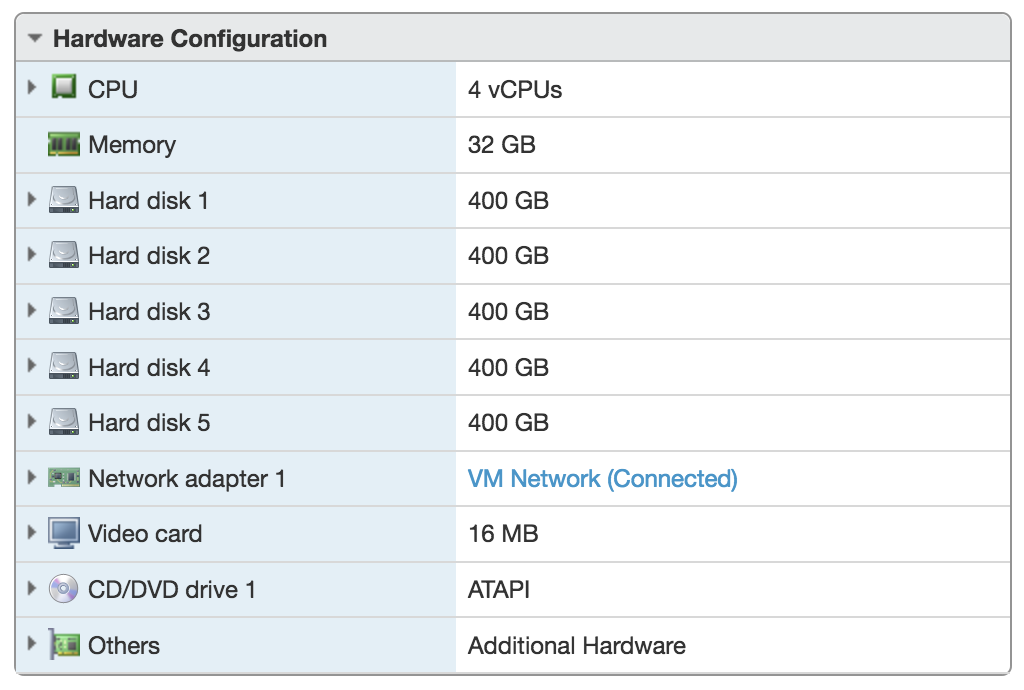

Download the most-recent Proxmox ISO image and create a new VM in your VMware environment (ESXi or Workstation). Make sure to give your newly created VM enough resources to be able to launch its own VMs. I created a VM with 32GB RAM and 2TB of storage, configured as a ZFS RAIDZ-3 array (5 x 400GB disks). That configuration looks like this:

Thoughts about cheating on Zwift

Tags: Cheating, Technology, Zwift

- Begin the distribution of Certified Zwift Engineers (aka “ZCE”). These would probably be the bike mechanics at your LBS to start with. They’re already there, they have the gear and they’re probably fixing your bike or adding equipment each season already. The ZCE would be able to train up on all aspects of Zwift, including app/game configuration and optimizing the experience for the end user. Oh, you have a Dell laptop with an integrated video card? Here are some settings you can apply to make that work for you during crowded group events. They’d also be trained in how to configure and validate bike fit, power meters and sensors that tie back to the machine/device used to run Zwift. Having drop-outs? Here are the tools to identify drop-outs and some workarounds that can help. This engages the LBS mechanics and the LBS itself to be a part of the growing Zwift ecosystem, not just as an endpoint for bike upgrades and repair, but as a full, end-to-end solution for building out a Zwift environment for the riders. Incentivizing those LBS mechanics to become ZCE then has the potential to ensure that more people come into the shop for bike fit, possible recommendations, upgrades, etc. I haven’t met a single bike mechanic who hates cycling. They do it because they have a passion for it, and they, like others, want to grow that passion. Who would turn down the ability to learn something new and exciting about their passion?

- Those same LBS that have their mechanics certified as ZCE can now brand their shop as a “Zwift Certified Training Center”, and teach riders how to use Zwift (à la spin class? LBS Fondo?), including tactics, such as when to drain your power-up so you can pull the next one over that hill. Buying a trainer at Best Buy won’t have the same overall value as buying it at your Zwift Certified LBS, even if Best Buy has them for 10% cheaper.

- Those same LBS + ZCE, can now perform equipment certification and qualification. They can properly calibrate your Power Meter + trainer combination, regardless of what you’re using. Forget trusting Qalvin on your iOS device to calibrate your Quarq PM or trusting your Garmin Vector pedals to be accurate out of the box, let the ZCE at your LBS (ZBS?) handle that for you.

The Enormous Dating Fraud: Match.com, Plenty of Fish, Tinder and OkCupid

The top 4 dating sites out there (Match.com, Plenty of Fish, Tinder and OkCupid) are so completely overrun with fraud now, it’s appalling.

Note: Match.com, Plenty of Fish, Tinder and OkCupid are all owned by the same parent company, along with roughly 40 other dating site properties.

I’ve been a free and paid member of these sites for 8 years (with 3 years off in the middle as I was dating someone). I have spent hundreds of hours poring over profiles, code, APIs, mobile apps and other interactions with these specific sites.

Right from the top, I’m going to strongly suggest you do not give any of these sites your money! Do not subscribe, do not give them a credit card, do not let them bill you, do not give them a single dollar. None.

I’ll break down exactly why below.

Let’s start with the biggest and worst offender: Match.com:

Read the rest of this entry »

HOWTO: Back up your Android device with native rsync

Recently, one of my Android devices stopped reading the memory card. Opening the device, the microSD card was so hot I couldn’t hold it in my hand. The battery on that corner of the device had started to swell slightly. I’ve used this device every day for 3+ years without any issues. Until this week.

I also use TitaniumBackup to back up my Android to this external memory card, but since the device can’t read the card, I can’t back it up to the card.

The card is fine, and works in my other devices, as well as being seen from the desktop. Other, blank microSD cards can’t be read in the device and similarly overheat within seconds. It’s bad.

Enter rsync, the Swiss-Army Knife of power, to back up my Android device!

Here’s how:

Read the rest of this entry »

HOWTO: Purge Amazon Echo History with iMacros

This one is quick and easy… Have you ever wanted to go back into your Amazon Echo device and delete the history of all commands you asked Alexa to do for you? All the searches? All the weather requests?

Well, you can… manually from the mobile app, or from the Amazon Alexa Configuration page, but that can take hours, because each card you want to remove is a minimum of two taps or clicks.

But there’s an even easier way… iMacros!

Load up the iMacros browser extension (Chrome version) (Firefox version) and create a new macro. You can edit it ‘raw’, if you wish, but you want only these lines in your macro:

    VERSION BUILD=8970419 RECORDER=FX
    TAB T=1
    URL GOTO=http://alexa.amazon.com/spa/index.html#cards
    TAG POS=1 TYPE=BUTTON ATTR=TXT:More
    TAG POS=1 TYPE=SPAN ATTR=TXT:Removecard

Now when you load up the Amazon Alexa Configuration page, you can just launch your macro from iMacros and play it in a loop to progressively delete each and every one of those cards in seconds.

I personally wiped out over 5,000 cards in under 2 minutes with this approach. It works great!

Comment below if you have any luck with it, or modify it in a way that becomes more useful to others.

HOWTO: Run multiple Zwift sessions on the same PC (Windows only)

Many people have asked me to write this up and I’m happy to be the first person to push Zwift this far with multiple, simultaneous sessions.

I can say with confidence that up to this point, I’m actually the only person who has this working correctly without overwriting or clobbering critical logs and data files. Others have tried some hacky methods, but they all result in instability and data loss (see “What does NOT work, and why” below).

I started this quest because I am working on a product design (“Secret Sauce” to be withheld in this HOWTO) that involves running multiple Zwift sessions on a single, 100% wireless PC, with the only wire being the single power cable to the wall. No USB cables, no video cables, no HDMI cables, no network cables.

Let’s get some general housekeeping out of the way first…

Read the rest of this entry »

HOWTO: Fully automated Zwift login on Mac OS X

Quite a few riders on the Facebook Zwift Riders group have expressed an interest in this, so I decided to take a couple of hours, learn AppleScript and knock this out. Done! (if you’re on Windows, you want this other HOWTO instead)

This code lets you create a single icon that will log you into Zwift, with no human interaction needed. It will put in your email and password, click the “Start Ride” button and away you go!

This also leverages the OS X Keychain to store your Zwift email address and password, so it’s secure, not leaked into the filesystem and is able to be called on by any other apps that might need it (ahem, like… Zwift itself!) :D

So here’s how to get it working…

First, we need to create a separate keychain to store the Zwift credentials. You could store them in the main keychain, but I’m a fan of credential separation, so let’s use that.

Read the rest of this entry »

HOWTO: Enable Docker API through firewalld on CentOS 7.x (el7)

Playing more and more with Docker across multiple Linux distributions has taught me that not all Linux distributions are treated the same.

There’s discord right now in the Linux community about systemd vs. SysV init. In our example, CentOS 7.x uses systemd, which spawns and starts all system services.

I am using this version of Linux to set up my own Docker lab host for tire-kicking, but it needs some tweaks.

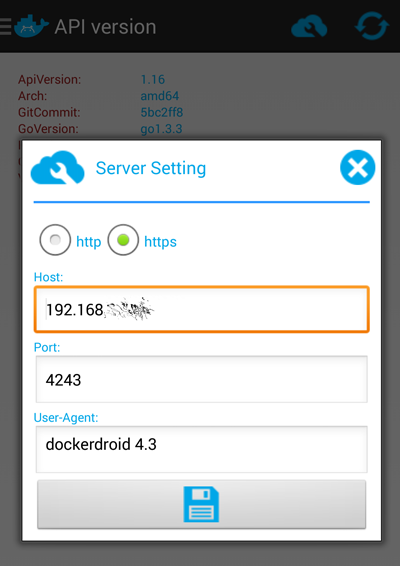

I also wanted to see if I could use the Docker API from my Android phone, using DockerDroid, which (after configuring this) works famously!

Here’s what you need to do:

- Log into your CentOS machine and update to the most-current Docker version. The version shipped with CentOS 7 in the repo as I write this post, is “docker-1.3.2-4.el7.centos.x86_64”. You want to be using something more current, and 1.4 is the latest. To fetch that (and preserve your existing version), run the following:

    $ su -
    # cd /bin && mv /bin/docker /bin/docker.el7
    # wget https://get.docker.com/builds/Linux/x86_64/docker-latest -O docker
    # chmod +x docker    # the downloaded file is not executable by default
    # systemctl restart docker
    # exit
    $

Now you should have a working Docker with the right version (current). You can verify that:

    $ sudo docker version
    Client version: 1.4.1
    Client API version: 1.16
    Go version (client): go1.3.3
    Git commit (client): 5bc2ff8
    OS/Arch (client): linux/amd64
    Server version: 1.4.1
    Server API version: 1.16
    Go version (server): go1.3.3
    Git commit (server): 5bc2ff8

- So far, so good! Now we need to make sure firewalld has a rule to permit this port to be exposed for external connections:

    $ sudo firewall-cmd --zone=public --add-port=4243/tcp --permanent
    $ sudo firewall-cmd --reload
    success

You can verify that this new rule was added, by looking at /etc/firewalld/zones/public.xml, which should now have a line that looks like this:

<port protocol="tcp" port="4243"/>

- Now let’s reconfigure Docker to expose the API to external client connections, by making sure the OPTIONS line in /etc/sysconfig/docker looks like this (note the added -H tcp://0.0.0.0:4243 portion):

OPTIONS=--selinux-enabled -H fd:// -H tcp://0.0.0.0:4243

- Restart the Docker service to enact the API on that port (if successful, you will not see any output):

sudo systemctl restart docker

- To test the port locally, install telnet and then try telnet’ing to the port on localhost:

    $ sudo telnet localhost 4243
    Trying ::1...
    Connected to localhost.
    Escape character is '^]'.
    HTTP/1.1 400 Bad Request
    Connection closed by foreign host.

All looks good so far!

- Lastly, install DockerDroid and configure it to talk to your server on this port:

Now you should be able to use DockerDroid to navigate your Images, Containers and API.
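The same API that DockerDroid talks to can also be checked from any machine with Node.js installed, as an alternative to the telnet test. The sketch below asks the Docker remote API for its `/version` document over plain HTTP on port 4243, matching the OPTIONS line above. The function names `dockerVersionRequest` and `fetchDockerVersion` are hypothetical, and the host name in the usage comment is a placeholder for your own CentOS machine.

```javascript
const http = require('http');

// Hypothetical helper: request options for the Docker remote API's
// GET /version endpoint on the port opened in firewalld above.
function dockerVersionRequest(host, port = 4243) {
  return { host, port, path: '/version', method: 'GET' };
}

// Hypothetical helper: resolves with the parsed JSON version document.
function fetchDockerVersion(host, port = 4243) {
  return new Promise((resolve, reject) => {
    const req = http.request(dockerVersionRequest(host, port), res => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', chunk => { body += chunk; });
      res.on('end', () => {
        try { resolve(JSON.parse(body)); } catch (err) { reject(err); }
      });
    });
    req.on('error', reject); // e.g. connection refused if the port is closed
    req.end();
  });
}

// Example (replace the placeholder host with your Docker machine):
// fetchDockerVersion('docker-host.example').then(v => console.log(v.Version));
```

A connection error here usually points at the firewalld rule or the OPTIONS line, while a successful JSON reply confirms both are in place.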

Good luck!