There’s been a lot of talk lately about “the cloud”. We’ve done the cloud before. First we called it “clusters”. Then we called it “grid”. Now we call it “the cloud”. I’m not sure what term marketing will need to call it in a year or two, but one thing is for sure: “cloud sync” is doomed to fail, before it even gets started.

“Cloud sync” is the term used to describe sending your data from various end-user devices (“clients”, usually handheld devices or desktop PIM apps) to the cloud, which is usually a central server somewhere. Some popular examples of this are:

…and literally dozens of others.

Of these, Funambol, ScheduleWorld and Google Sync are among the most mature, and also among the most problematic (they are based on SyncML).

And every single one of them is failing, because they’re all based on some very broken logic and monolithic designs:

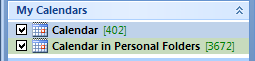

- Not a single one of them supports synchronizing multiple calendars or other data sources while keeping them separate in the cloud’s datastore itself. Without the ability to sync multiple data sources under the same user, the cloud becomes completely useless.

- Not a single one of them understands how to get sync right, without corrupting, duplicating or deleting data. Every single one of them has an issue here.

- None of them are prepared for handling a device they’ve never seen before, or a device which has records that include a field or format they don’t already know about. Everything has to be pre-determined, pre-defined, and that will never scale.

I’ll give you one example, one I’m fighting with every single day. I’ve run this same (very structured) scenario through about two dozen vendor, free, and commercial projects and products, and every single one of them breaks down and fails in one way or another.

- Back up your device, making sure you have a complete copy of the data, in case anything goes wrong (and it will).

- Sync your client device to its native software. In the case of Palm, you’d sync that to Synergy. In the case of BlackBerry, you’d sync that to BlackBerry Desktop Manager, and so on.

- Sync that same device to your favorite PIM package. If you’re using an iPhone, sync that to iCal or Microsoft Outlook. If you’re using Linux, sync your device to Kontact or Evolution. If you’re using a BlackBerry, you’d sync that to Lotus Notes or Microsoft Outlook.

- Sync this same device to “the cloud” using whatever software is provided by the cloud project you’re synchronizing with. If you’re using ScheduleWorld, use their sync software. If you’re using another, use whatever software they provide.

- Now install the sync tool for your target “cloud” service in your PIM application. If you’re using Microsoft Outlook for example and Funambol, you’d install the “Funambol Outlook Plugin”. Likewise for whatever other PIM and service you choose.

At this point, your client device (your iPhone, BlackBerry, Palm or other handheld) and your PIM application (Microsoft Outlook, Lotus Notes, Evolution, Kontact, etc.) should contain exactly the same data, and that same data should now be available “in the cloud”.

Now sync your handheld device to the cloud again, and watch what happens. In every single case, without fail, you will get (a) corruption, (b) lost data, or (c) duplicated data.

There have been numerous discussions on dozens of mailing lists about how to get sync to work correctly. I can say from over a decade of in-depth personal experience with synchronization that once you go beyond a 1:1 relationship, it is downright impossible to make this work correctly without very aggressive, deep inspection of every single field of every single record, at each sync. The “cloud” concept already implies a many:1 or many:many relationship.

Let me replay it to show what I mean:

- Sync your handheld device to your PIM (usually using a USB connection). Right now, your handheld and your PIM should contain exactly the same data.

- Sync handheld device to the cloud. This creates new records in the cloud which should match your PIM exactly. Now PIM, device and cloud should contain the same data.

- Sync your PIM to the cloud. Depending on the software used, you’ll trash data here. In the case of something like Funambol, you’ll take multiple data sources and merge them into one source in the PIM, creating a mess and lots of duplicates. Other software packages do similar things to the data.

- Sync your handheld device to the cloud again, which will now duplicate and transport that mangled data to the handheld.

There is absolutely no way for the cloud to know whether your handheld holds the same data as the cloud or the PIM, so it must inspect every record at sync time. None of them do this.

You’ll probably hear the terms “slow sync” and “fast sync” in reference to these issues, and I can say with certainty that nobody is doing it 100% correctly.

“Fast sync” is a term used to describe synchronizing one device with one server. Pointers to the last changes on either end are captured, so when you sync again, it only has to send the updates and changes across. Palm handheld devices use a “LastSyncID” on the device to identify the client to the server-side. If the server sees a different incoming client connection, it knows that it isn’t the device it last talked to, so it initiates a “slow sync”.

“Slow sync” is a term used to describe a sync that happens when the server and the client device can’t determine if they’ve “spoken” before, so they send the entire contents of each datastore (calendar, contacts, tasks, memos, etc.) and then compare on a record-by-record basis, to see if there are any changes.

Once you sync more than one device to a server, there is no way you can maintain a “fast sync” relationship with each client.
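To make that concrete, here is a minimal sketch of the single “last sync ID” scheme described above. The `Server` class and `choose_mode` method are hypothetical names invented for illustration, not any vendor’s API. It assumes the server remembers only the one client it last talked to, which is exactly why adding a second client keeps knocking everyone back to a slow sync.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Server:
    # Hypothetical: the ID of the only client this server remembers.
    last_sync_id: Optional[str] = None

    def choose_mode(self, client_id: str) -> str:
        """Return 'fast' only if this same client was the last one to sync."""
        mode = "fast" if client_id == self.last_sync_id else "slow"
        # Remember this client as the most recent sync partner.
        self.last_sync_id = client_id
        return mode


server = Server()
sequence = ["handheld", "handheld", "pim", "handheld"]
modes = [server.choose_mode(client) for client in sequence]
print(modes)  # ['slow', 'fast', 'slow', 'slow']
```

The handheld gets a fast sync only while it is the sole client. As soon as the PIM syncs in between, the handheld’s next sync falls back to slow.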

A == handheld (iPhone, BlackBerry or other)

B == PIM application (Microsoft Outlook, Lotus Notes, Sunbird)

C == "cloud" server

A -> C

B -> C

There is no way for C to know that ‘B’ contains the same data as ‘A’, so it must inspect every record as it arrives, to consolidate the changes, if any are found.
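One way to picture that per-record inspection is the rough sketch below. It assumes records are plain dictionaries and that each device attaches its own `local_id`, which the server ignores when comparing. The `fingerprint` and `consolidate` helpers are made up for this example, and the normalization (trim and lowercase) is deliberately simplistic. A real implementation would need far more careful field-by-field comparison.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Hash a record's normalized content, ignoring the device-local ID."""
    content = {
        key: str(value).strip().lower()
        for key, value in record.items()
        if key != "local_id"
    }
    payload = json.dumps(content, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def consolidate(store: dict, incoming: list) -> int:
    """Store only records whose content is new; return how many were added."""
    added = 0
    for record in incoming:
        key = fingerprint(record)
        if key not in store:
            store[key] = record
            added += 1
    return added


cloud = {}
from_handheld = [{"local_id": "A-1", "name": "Ada Lovelace", "phone": "555-0100"}]
from_pim = [{"local_id": "B-9", "name": "ada lovelace ", "phone": "555-0100"}]

added_a = consolidate(cloud, from_handheld)  # A -> C
added_b = consolidate(cloud, from_pim)       # B -> C
print(added_a, added_b, len(cloud))  # 1 0 1
```

Because C compares content rather than trusting that a record from ‘B’ is new, the second sync adds nothing and no duplicate is created.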

The closest I’ve come to a “perfect solution” is using Intellisync’s backend behind BlackBerry Desktop Manager, but it requires a local (USB or Bluetooth) connection, and requires Microsoft Windows. This is an unacceptable solution to me.

When you sync your BlackBerry to BDM, it does a minimum of 4 separate passes across the data, to compare, inspect and merge any and all changes that may be necessary. If anything has changed or is in conflict, a dialog will be displayed that gives you the ability to re-sync, cancel, accept, etc. the changes, as well as inspect them on a change-by-change, field-by-field basis. It does this every single time. There is no “fast sync” capability with BlackBerry Desktop Manager. It does not trust the incoming data by default, nor should it.

But using BDM, there is no way to accept some changes, reject others, merge yet others. It’s an all or nothing solution, and when you have 4,700+ Calendar events as I do for example and a sync process that takes over 20 minutes for each sync pass, it can be quite painful.
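For contrast, here is a small sketch of what accepting some changes and rejecting others could look like at the field level. It is not how BDM or Intellisync works internally. The `diff_fields` and `apply_selected` functions are hypothetical, and the sketch assumes a field missing from the incoming record means “delete it”.

```python
def diff_fields(local: dict, incoming: dict) -> dict:
    """Return {field: (local_value, incoming_value)} for each differing field."""
    changes = {}
    for name in sorted(set(local) | set(incoming)):
        if local.get(name) != incoming.get(name):
            changes[name] = (local.get(name), incoming.get(name))
    return changes


def apply_selected(local: dict, changes: dict, accepted: set) -> dict:
    """Apply only the accepted field changes; every other field stays local."""
    merged = dict(local)
    for name, (_old, new_value) in changes.items():
        if name not in accepted:
            continue
        if new_value is None:
            # Assumption: a field absent from the incoming record is a deletion.
            merged.pop(name, None)
        else:
            merged[name] = new_value
    return merged


local = {"title": "Dentist", "start": "09:00", "location": "Main St"}
incoming = {"title": "Dentist", "start": "10:30", "location": "Elm St"}

changes = diff_fields(local, incoming)
merged = apply_selected(local, changes, accepted={"start"})
print(changes)  # {'location': ('Main St', 'Elm St'), 'start': ('09:00', '10:30')}
print(merged)   # {'title': 'Dentist', 'start': '10:30', 'location': 'Main St'}
```

Here the new start time is accepted while the location change is rejected, which is the kind of per-change control an all-or-nothing sync can’t offer.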

Until we develop a way to accept anything the client sends, store it on the server in a unique, per-device datastore, and then aggregate that data in an intelligent way back down to the other requesting clients, “cloud sync” is doomed to fail, before it even gets off the ground.

The current thinking is that we just push the data up to the server(s) (“the cloud”), and then pull it back down to other clients or end-devices. That logic is broken, because it isn’t that simple.

If I have a device which supports photos in a Contact record, and I sync to my PIM which does not support photos in the Contact records, and then sync my device to the cloud, and my PIM to the cloud… what happens to the photos? I’ll tell you… they’re lost. Once I sync that PIM to the cloud, the photos are gone, and then when I sync my handheld to the cloud, those (photo-less) records in the cloud are sent to the handheld, trashing all of my local contact photos.

This is just one example, but it proves the point that we can’t continue storing data “in the cloud” in a single, large datastore. Each device is unique, and each device’s way of representing the data is unique. Without keeping that data separate, you’re going to trash, duplicate or lose data.
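As a sketch of the alternative, the example below keeps each device’s copy of a record separate and builds a per-client view only on the way back down. Everything here is an assumption made for illustration: the `PerDeviceStore` class, the idea that the copies are already matched under a shared `record_id`, and the naive “later push wins” handling of overlapping fields. Real conflict resolution is left out entirely.

```python
from collections import defaultdict


class PerDeviceStore:
    """Keeps every device's copy of a record separate, exactly as it was sent."""

    def __init__(self):
        # record_id -> device_id -> the fields that device pushed
        self._copies = defaultdict(dict)

    def push(self, device_id: str, record_id: str, fields: dict) -> None:
        """Store this device's copy without touching any other device's copy."""
        self._copies[record_id][device_id] = dict(fields)

    def view_for(self, record_id: str, supported: set) -> dict:
        """Union all devices' fields, then keep only what the requester supports."""
        merged = {}
        for fields in self._copies[record_id].values():
            merged.update(fields)  # naive: later pushes win on overlapping fields
        return {k: v for k, v in merged.items() if k in supported}


store = PerDeviceStore()
store.push("handheld", "c1", {"name": "Ada", "phone": "555-0100", "photo": "ada.jpg"})
store.push("pim", "c1", {"name": "Ada", "phone": "555-0100"})  # PIM has no photos

handheld_view = store.view_for("c1", {"name", "phone", "photo"})
pim_view = store.view_for("c1", {"name", "phone"})
print(handheld_view)  # {'name': 'Ada', 'phone': '555-0100', 'photo': 'ada.jpg'}
print(pim_view)       # {'name': 'Ada', 'phone': '555-0100'}
```

Because the PIM’s photo-less copy never overwrites the handheld’s copy, the photo survives the round trip, and the PIM still only receives fields it understands.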

There are ways to fix this, and plenty of bright people are working on these problems. But most of the projects I’ve encountered are so stuck in the dark ages that they refuse to think about solutions that are 100% future-proof and that allow any device, with any data representation, to cohabitate within the cloud alongside other devices and services.





